PBG Analysis – October Edition

Revolutionizing Portfolio Management: How Machine Learning Supercharges Markowitz’s Classic Framework

Dear PBG.io Community,

In today’s dynamic financial landscape, where data pours in torrents and market regimes can shift overnight, traditional quantitative tools need an upgrade. That’s the message of a landmark paper titled “Enhancing Markowitz’s Portfolio Selection Paradigm with Machine Learning” by López de Prado, Simonian, Fabozzi, and Fabozzi (2025).

The authors revisit Harry Markowitz’s 1952 mean–variance optimization model, the foundation of modern portfolio theory, and show how machine learning (ML) can enrich, rather than replace, this framework. Their central idea: combine econometric rigor with data-driven intelligence to create more adaptive, robust portfolios suited to today’s nonlinear (1) and high-dimensional (involving a very large number of variables or features) markets.

At PBG.io, this research resonates deeply with our mission. Several of these methodologies already power our quantitative analysis, while others guide our roadmap for the next wave of implementation. Below is an accessible yet conceptually faithful walkthrough of the paper’s core insights.

The Foundation: Why Markowitz Needs ML

Markowitz framed portfolio construction as an optimization problem: minimize variance (2) for a given expected return or maximize return for a given level of risk. The challenge today is that markets are noisy, data is vast, and linear assumptions (3) often fail. Machine learning shifts the paradigm from “data modeling”—which assumes a specific stochastic process (4)—to “algorithmic modeling,” which focuses on prediction and pattern recognition even when the underlying process is unknown. This shift enables practitioners to extract alpha (5), model nonlinear dependencies, and manage risk in ways econometrics alone cannot.
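For readers who prefer notation, the first form of the problem (minimum variance for a target return) can be written as below. The symbols are our own shorthand rather than the paper's: w is the vector of portfolio weights, Σ the covariance matrix of asset returns, μ the vector of expected returns, and r* the target return. This is the textbook fully-invested version, with no short-sale or position-size constraints.

```latex
\min_{w} \; w^{\top} \Sigma \, w
\quad \text{subject to} \quad
w^{\top} \mu = r^{*}, \qquad w^{\top} \mathbf{1} = 1
```

The mirror-image form maximizes the expected return w'μ subject to a cap on the variance w'Σw. Both trace out the same efficient frontier.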

Signal Generation: Finding Structure in the Noise

Investment signals—quantitative or qualitative—guide decision-making. ML expands the signal-generation toolkit far beyond regression and ARIMA (6) models:

- Supervised learning (e.g., support vector machines, random forests) predicts labels or values such as expected returns.

- Unsupervised learning (e.g., k-means, hierarchical clustering) reveals hidden structures like volatility regimes.

- Reinforcement learning teaches agents (7) to maximize cumulative rewards through trial and error.

- Neural networks (including LSTM and CNN architectures) uncover temporal and nonlinear patterns in price data.

The authors emphasize rigorous evaluation metrics—precision, recall, F1-score, and accuracy—to validate predictive power.
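To make those four metrics concrete, here is a minimal sketch that computes them for a binary "up/down" signal. The function name and the sample labels are illustrative assumptions on our part, not taken from the paper.

```python
def classification_metrics(y_true, y_pred):
    """Precision, recall, F1 and accuracy for binary labels (1 = positive, 0 = negative)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # predicted up, was up
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # predicted up, was down
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # predicted down, was up
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # predicted down, was down

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    accuracy = (tp + tn) / len(pairs)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Hypothetical example: 1 = asset rose over the horizon, 0 = it did not.
actual = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 1, 0, 1, 0]
metrics = classification_metrics(actual, predicted)
print(metrics)
```

Accuracy alone can mislead when "up" and "down" periods are unbalanced, which is why precision and recall are usually read alongside it.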

At PBG.io, supervised and unsupervised ML already support our signal-detection layer for identifying mispriced or momentum-driven assets (8), and we’re prototyping reinforcement-learning models to make our trading systems self-adaptive.


Feature Selection: Distilling the Factor Universe

Not every variable adds value. Including too many can cause overfitting, where a model fits history but fails on new data. Techniques such as LASSO and elastic net regularization shrink or remove weak predictors, while tree-based models (e.g., random forests) provide feature-importance rankings that help isolate the most relevant drivers of return. The paper illustrates how these methods tame the notorious “factor zoo” by identifying a parsimonious (9) subset of explanatory features.
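The way LASSO "shrinks or removes" predictors can be seen in a few lines. The sketch below is a bare-bones coordinate-descent solver for the objective (1/2n)·||y − Xβ||² + λ·||β||₁, run on a tiny made-up dataset with one strong factor and one weak one. The function names, the data, and the penalty value are all illustrative assumptions; a production system would use a tested library rather than this toy.

```python
def soft_threshold(z, lam):
    """Pull z toward zero by lam; values within [-lam, lam] become exactly 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=100):
    """Minimise (1/2n)*||y - X b||^2 + lam*||b||_1 by cycling over coefficients."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho = 0.0   # covariance of feature j with the residual that ignores j
            norm = 0.0  # squared length of feature j
            for i in range(n):
                others = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - others)
                norm += X[i][j] ** 2
            beta[j] = soft_threshold(rho / n, lam) / (norm / n)
    return beta


# Hypothetical data: y = 2.0 * factor_1 + 0.05 * factor_2 (two uncorrelated factors).
X = [[1, 1], [-1, 1], [1, -1], [-1, -1]]
y = [2.05, -1.95, 1.95, -2.05]

beta = lasso_coordinate_descent(X, y, lam=0.1)
print(beta)  # strong factor shrinks from 2.0 to about 1.9; weak factor is set to exactly 0.0
```

The weak factor is dropped because its contribution (0.05) is smaller than the penalty (0.1), while the strong factor survives with a slightly reduced coefficient.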

At PBG.io, we employ and continue to evaluate techniques such as LASSO and random-forest filtering to ensure our multi-asset strategies emphasize the most informative risk premia (such as momentum, carry, or value) while minimizing redundancy. Neural networks for nonlinear feature discovery are next on our roadmap.

Portfolio Optimization: Solving Markowitz’s Weaknesses

The authors systematically dissect Markowitz’s main limitations and the ML-based solutions now addressing them:

- Noise-induced instability — Empirical covariance matrices (10) are notoriously noisy. Random Matrix Theory (RMT) and López de Prado’s residual eigenvalue denoising techniques filter out eigenvalues consistent with randomness, improving out-of-sample stability, that is, how well a model performs on unseen data.

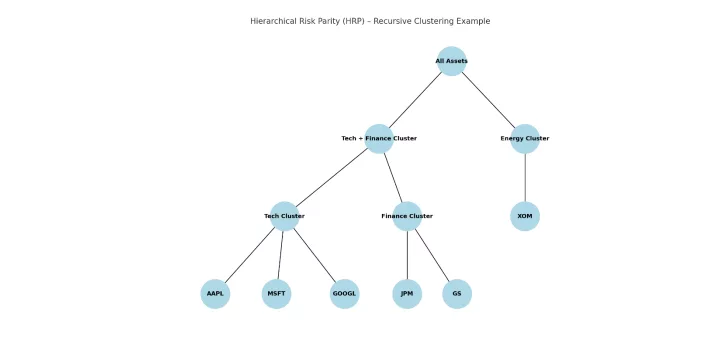

- Input uncertainty — Covariance-matrix inversion magnifies estimation error. Hierarchical Risk Parity (HRP) replaces inversion with recursive clustering, generating diversified allocations that outperform standard minimum-variance portfolios out of sample.

- Signal-induced instability (“Markowitz’s curse”) — When assets are highly correlated, small parameter shifts can cause large weight swings. Nested Clustered Optimization (NCO) mitigates this by optimizing within and across clusters, cutting portfolio RMSE (11) by more than half relative to classical Markowitz solutions.

- IID and normality assumptions — Markets exhibit fat tails (12) and regime shifts (13). ML allows regime-aware (14) and non-Gaussian modeling via random forests, spectral clustering, and reinforcement learning for dynamic risk budgeting (15).

- Single-period limitations — Most investors face multi-horizon decisions. Advances like hyperparameter (16) optimization, Bayesian graphical models, and even quantum annealing now extend optimization across time.
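The instability described in the list above is easy to reproduce with just two assets. The sketch below uses the standard closed-form weight of the two-asset minimum-variance portfolio and nudges one volatility estimate from 21% to 19%. The numbers and the function name are hypothetical and chosen only to illustrate the effect; this is not the paper's experiment, and it does not implement HRP or NCO.

```python
def min_variance_weight(sigma1, sigma2, rho):
    """Weight of asset 1 in the fully-invested two-asset minimum-variance portfolio.

    Undefined when the denominator is zero (rho = 1 with equal volatilities).
    """
    cov = rho * sigma1 * sigma2
    return (sigma2 ** 2 - cov) / (sigma1 ** 2 + sigma2 ** 2 - 2 * cov)


def weight_swing(rho, sigma1=0.20, sigma2_high=0.21, sigma2_low=0.19):
    """Change in asset 1's weight when asset 2's volatility estimate moves by 2 points."""
    w_before = min_variance_weight(sigma1, sigma2_high, rho)
    w_after = min_variance_weight(sigma1, sigma2_low, rho)
    return w_before, w_after, abs(w_before - w_after)


# Highly correlated pair: the weight flips from roughly 98% to roughly 0%.
print(weight_swing(rho=0.95))

# Uncorrelated pair: the same input change moves the weight by about 5 points.
print(weight_swing(rho=0.0))
```

The same two-point change in a single input either barely matters or reverses the whole allocation, depending only on how correlated the assets are. Clustering-based methods such as HRP and NCO are designed to contain exactly this sensitivity.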

At PBG.io, HRP will underpin our risk-allocation engine, with the aim of lowering drawdowns and turnover (17). NCO and regime-aware extensions are actively under development to improve resilience during volatile market transitions.

Stress Testing: Learning from Extremes

Traditional stress tests extrapolate from history. ML adds realism by identifying hidden clusters (18) and anomalies that signal potential tail risks.

Methods like Gaussian Mixture Models (GMM) and DBSCAN reveal data-driven regimes or outliers corresponding to stress environments, insights already adopted by firms like Two Sigma (19). PBG.io is analyzing the use of similar clustering techniques to simulate market shocks and exploring generative models to create synthetic “what-if” scenarios for extreme-event planning.
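As a rough illustration of how density-based clustering isolates a stress day, here is a compact DBSCAN sketch in plain Python. The observations (daily return in percent, daily realized volatility in percent) and the `eps` and `min_pts` settings are invented for the example, and real work would rely on a maintained library implementation rather than this simplified version.

```python
from math import dist

NOISE = -1


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""
    labels = [None] * len(points)

    def neighbors(i):
        # All points within eps of point i, including i itself.
        return [j for j in range(len(points)) if dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be re-labelled as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster, not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:  # core point: keep growing the cluster
                queue.extend(nbrs)
        cluster += 1
    return labels


# Hypothetical (return %, volatility %) observations: five calm days and one shock.
days = [(0.1, 1.0), (0.2, 1.1), (-0.1, 0.9), (0.0, 1.0), (0.15, 1.05), (-6.0, 4.5)]
labels = dbscan(days, eps=0.5, min_pts=3)
print(labels)  # [0, 0, 0, 0, 0, -1]
```

The calm days form one dense cluster, while the shock day has no close neighbors and is flagged as noise, which is the kind of observation a stress-testing workflow would examine further.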

NLP and Generative AI: Turning Text into Alpha

Machine learning’s frontier now extends to Natural Language Processing (NLP) and Generative Language Models (GLMs) such as GPT. These models extract sentiment and event information from unstructured sources—earnings calls, filings, or news—and convert them into quantitative signals.

They can also generate synthetic market narratives for model training or scenario analysis. At PBG.io, sentiment analytics already complement our quantitative filters; GLM-driven event detection and data simulation are slated for future integration.

Challenges and the Road Ahead

The authors remind us that ML is no panacea. Overfitting, data quality, scalability, and regulatory transparency remain real hurdles. Yet, with sound cross-validation, explainable-AI techniques, and disciplined governance, ML can be responsibly embedded into the investment process.

For PBG.io, this paper validates our hybrid philosophy: blending classical finance with advanced data science to construct portfolios that are not just optimized, but adaptive. As we continue integrating NCO, regime-aware ML, and generative analytics, our goal remains clear—deliver robust, risk-balanced performance in an ever-evolving market.

If you’d like to explore these concepts or discuss how they shape your portfolio, we’d love to hear from you.

Best regards,

The PBG.io Team

1- Relationships that are not strictly proportional or constant

2- A statistical measure of how far returns deviate from their mean

3- The idea that relationships between variables are straight-line and constant

4- A random or probabilistic process that generates data over time

5- The portion of excess return above a benchmark

6- Autoregressive Integrated Moving Average, a classical time-series forecasting model

7- Autonomous decision-makers

8- Assets whose prices continue to move in the same direction due to market momentum

9- A parsimonious subset includes only the necessary number of variables to explain a phenomenon effectively

10- Matrices estimated from observed data that describe how assets move together

11- Root Mean Square Error—a measure of average prediction error

12- Extreme events occurring more frequently than under a normal distribution

13- Abrupt changes in market behavior, such as from bull to bear phases

14- “Regime-aware” models recognize and adapt to different market regimes or environments, adjusting their behavior according to context

15- Continuously adjusting how risk is distributed across assets based on changing conditions

16- A hyperparameter is a setting chosen before training that controls how a model learns.

17- The rate at which portfolio holdings are traded or replaced

18- Groups or patterns in the data that aren’t immediately visible

19- A leading quantitative hedge fund known for using advanced data science and AI in systematic investing

This content is informational only and does not constitute financial advice, an offer, or a solicitation to invest. Cryptoassets are volatile and may result in total loss of capital. Past performance is not indicative of future results. Subject to applicable regulation. Do your own assessment before investing.